Maximilian Beck

Working on efficient Large Language Model architectures.

ELLIS PhD Student at Johannes Kepler University Linz, Institute for Machine Learning

I am a final-year PhD student at the Institute for Machine Learning at Johannes Kepler University (JKU) Linz, advised by Sepp Hochreiter, and a PhD Researcher at NXAI.

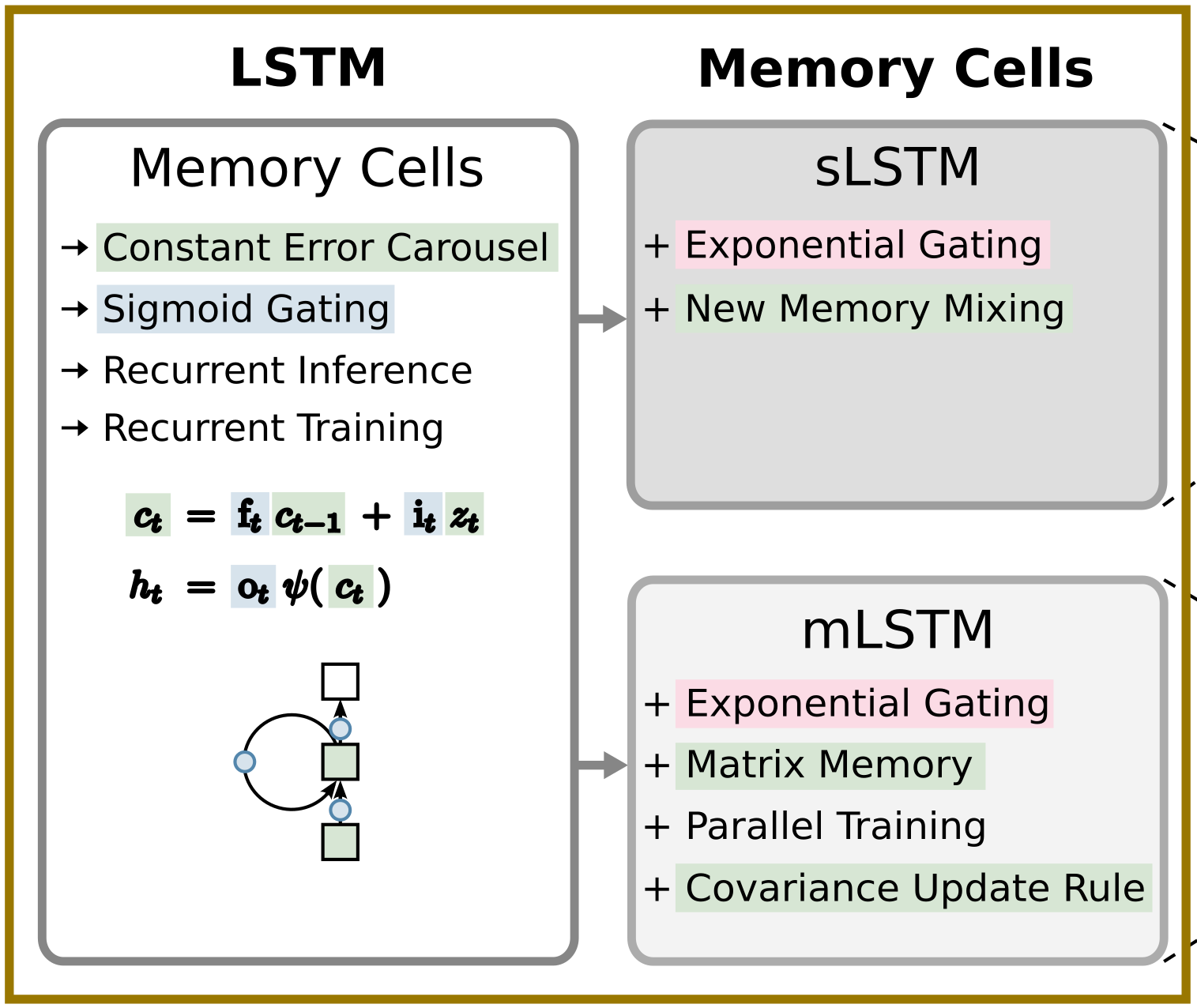

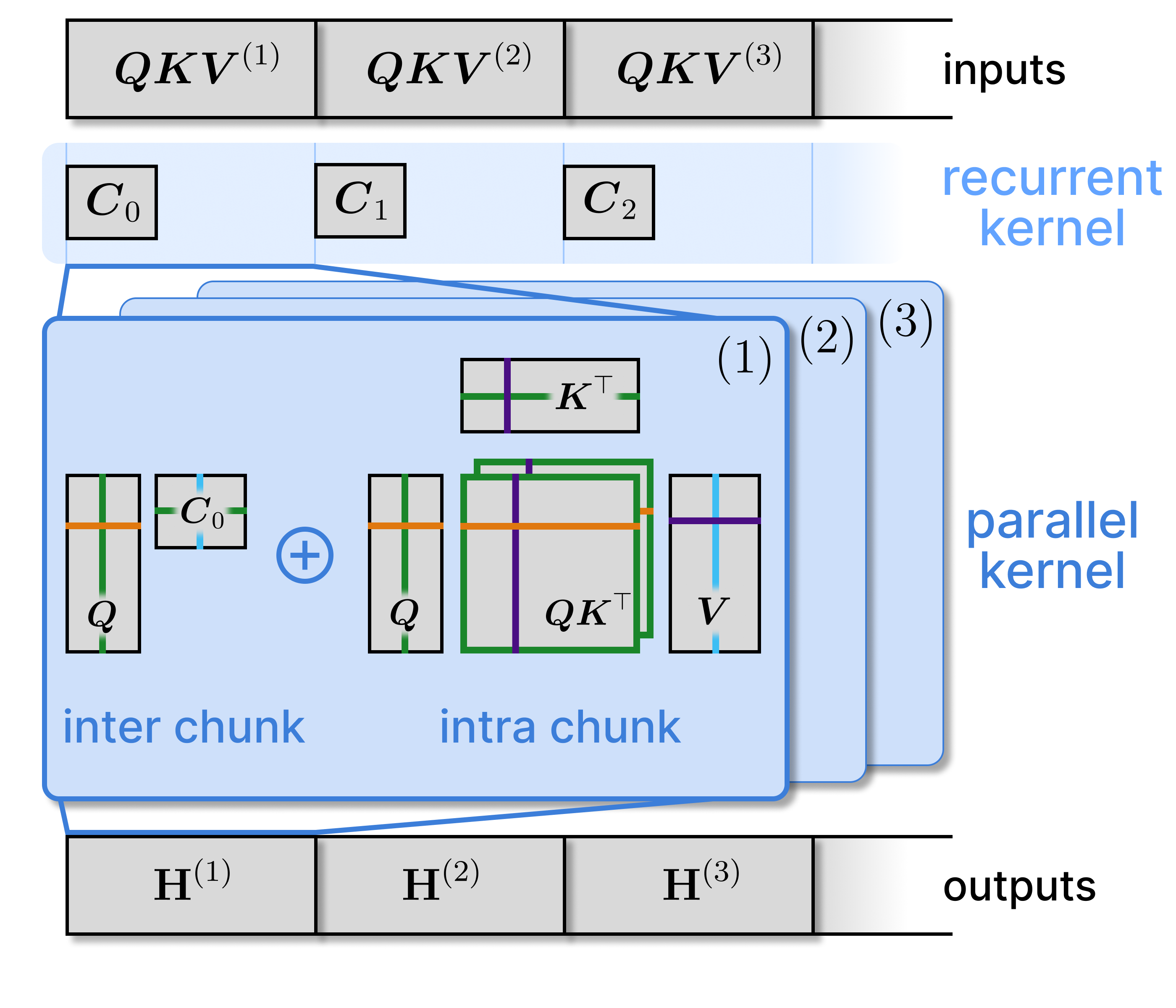

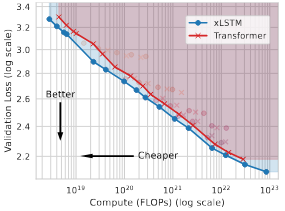

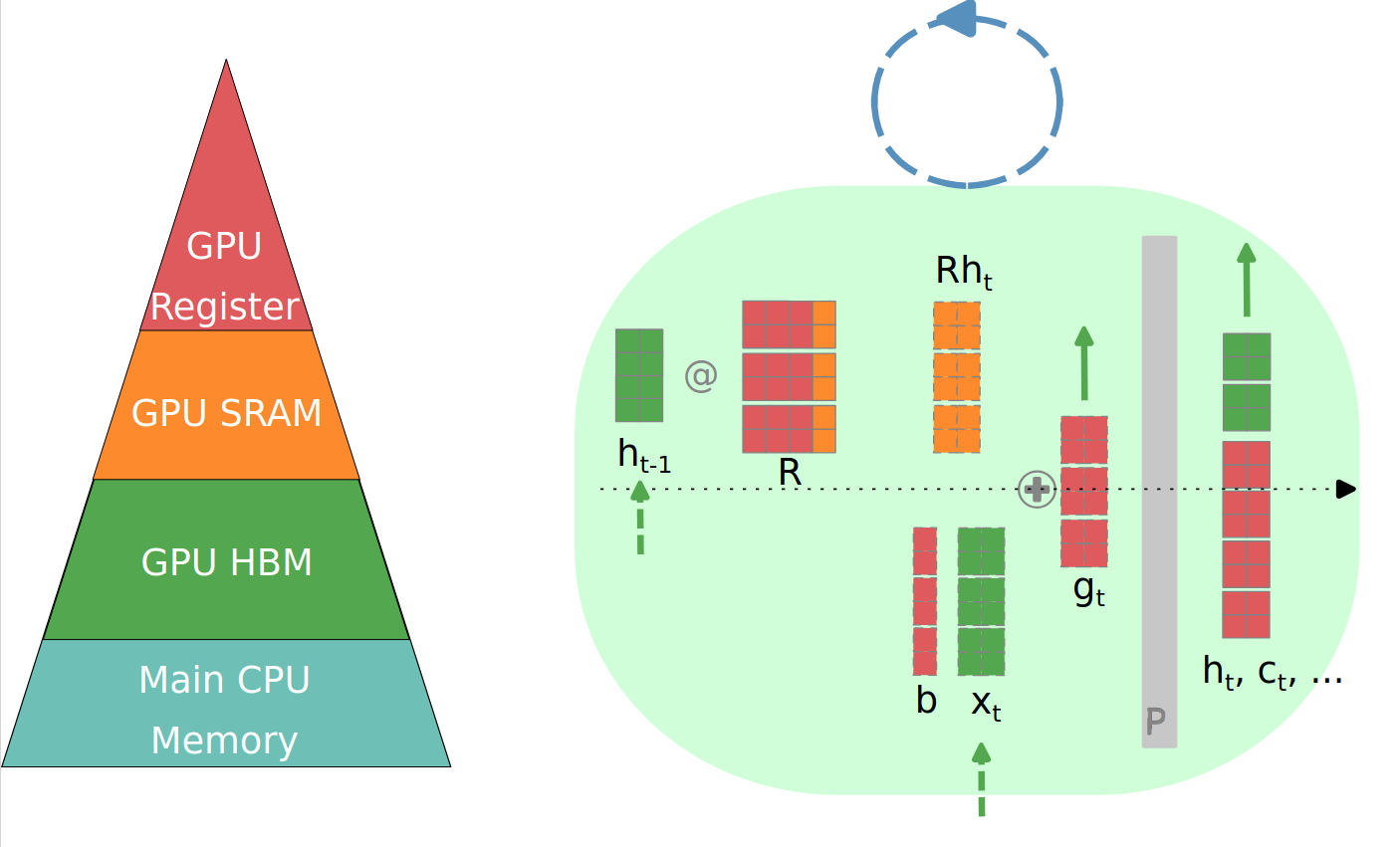

My research focuses on efficient architectures for Large Language Models (LLMs) with sub-quadratic complexity. I’m particularly interested in optimizing training and inference efficiency, understanding scaling laws, and exploring the application of these architectures in domains such as computer vision and robotics.

From May to October 2025, I interned at Meta FAIR on the CodeGen team, where I worked on LLMs for code generation and understanding. Specifically, I explored architectures for code world models, evaluated code execution prediction capabilities, and worked on neural debuggers.

Before starting my PhD, I completed both my Bachelor’s and Master’s studies in Mechatronics and Information Technology at the Karlsruhe Institute of Technology (KIT), with a focus on Control Theory, graduating in 2017 and 2021, respectively.

news

| Date | News |
|---|---|
| Jan 22, 2026 | Our two papers, "xLSTM Scaling Laws: Competitive Performance with Linear Time-Complexity" and "Short window attention enables long-term memorization", have been accepted at ICLR 2026 in Rio de Janeiro! |
| Oct 24, 2025 | I have successfully finished my internship at Meta FAIR with Jonas Gehring. I am happy to have contributed to and co-authored two papers, with one more in preparation. Stay tuned! |
| Oct 03, 2025 | We published our paper "xLSTM Scaling Laws: Competitive Performance with Linear Time-Complexity" on arXiv. |
| Sep 06, 2025 | I have handed in the pre-defense report for my PhD thesis, "xLSTM: Recurrent Neural Network Architectures for Scalable and Efficient Large Language Models". |
| May 12, 2025 | I started a summer internship at Meta FAIR in Paris, working on Large Language Models for code. |